Generative AI Doesn’t Simplify Hardware Challenges

“Generative AI: Amplifying Innovation, Not Simplifying Hardware”

Introduction

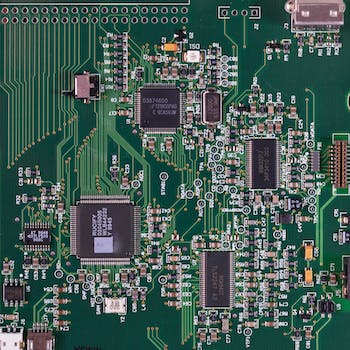

Generative AI, while revolutionizing the field of artificial intelligence by enabling machines to create content and solve problems with minimal human intervention, does not inherently simplify the complexities associated with hardware. Despite its advanced capabilities in generating text, images, and other forms of media, the deployment and operation of generative AI models still face significant hardware challenges. These include the need for high computational power, energy efficiency, data storage, and the management of heat dissipation, which are critical for maintaining the performance and sustainability of AI systems. As such, while generative AI continues to push the boundaries of what machines can do, it also demands substantial advancements in hardware technology to fully realize its potential.

Impact of Generative AI on Semiconductor Design Complexity

The advent of generative artificial intelligence (AI) has ushered in a new era of possibilities in various fields, including semiconductor design. However, it is crucial to understand that while generative AI can significantly enhance the design process, it does not inherently simplify the underlying hardware challenges. In fact, the integration of generative AI often increases the complexity of semiconductor design, demanding more from the hardware that supports it.

Generative AI, particularly in the context of semiconductor design, involves algorithms that can generate new designs or optimize existing ones based on a set of parameters and objectives. This capability is revolutionary as it allows for rapid prototyping and iterative testing without the need for extensive human intervention. However, the sophistication of these AI models requires advanced hardware architectures that can handle large volumes of data and complex computations efficiently.

The primary challenge lies in the need for high-performance computing (HPC) systems to train and run these AI models. These systems must be equipped with powerful processors, high-speed memory solutions, and efficient data transfer capabilities. The complexity of the semiconductor design increases as these elements must not only be present but also be optimized to work in harmony to avoid bottlenecks that can degrade the performance of AI applications.
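One common way to reason about this kind of bottleneck is the roofline model. It is not part of the original discussion and is used here only as an illustration. The Python sketch below bounds attainable throughput by the lower of two limits: peak compute, and memory bandwidth multiplied by arithmetic intensity (operations performed per byte moved). The function names and hardware figures are hypothetical, chosen only to make the arithmetic visible.

```python
def attainable_flops(peak_flops: float, mem_bandwidth: float, intensity: float) -> float:
    """Roofline bound on throughput, in FLOP/s.

    peak_flops    -- peak compute rate in FLOP/s
    mem_bandwidth -- memory bandwidth in bytes/s
    intensity     -- arithmetic intensity in FLOP per byte moved
    """
    return min(peak_flops, mem_bandwidth * intensity)


def limiting_resource(peak_flops: float, mem_bandwidth: float, intensity: float) -> str:
    """Return "memory" below the ridge point and "compute" at or above it."""
    ridge_point = peak_flops / mem_bandwidth  # FLOP/byte where the two limits meet
    return "memory" if intensity < ridge_point else "compute"


# Hypothetical accelerator (assumed figures, not from the article):
# 100 TFLOP/s peak compute and 1 TB/s memory bandwidth.
PEAK = 100e12
BANDWIDTH = 1e12

if __name__ == "__main__":
    for intensity in (10.0, 200.0):
        bound = attainable_flops(PEAK, BANDWIDTH, intensity)
        limit = limiting_resource(PEAK, BANDWIDTH, intensity)
        print(f"intensity={intensity:6.1f} FLOP/B  bound={bound / 1e12:6.1f} TFLOP/s  limited by {limit}")
```

Under these assumed figures, a workload with low arithmetic intensity is capped by memory bandwidth long before it reaches peak compute, which is why the processor, memory, and interconnect have to be tuned together rather than one at a time.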

Moreover, the deployment of generative AI in semiconductor design necessitates a reevaluation of power consumption and heat dissipation strategies. AI-driven tools are resource-intensive, and as they scale, the power requirements and thermal outputs also increase. This escalation requires innovative approaches to power management and cooling techniques, which adds another layer of complexity to the hardware design.

Additionally, the reliability and accuracy of AI-generated designs must be thoroughly validated against standard benchmarks to ensure they meet the rigorous demands of real-world applications. This validation process itself is complex and resource-intensive, requiring additional computational resources to simulate and test each design iteration under a variety of conditions.

Addressing these issues requires a multidisciplinary approach. Collaboration between AI researchers, hardware engineers, and system architects is essential to create optimized hardware that can support the advanced capabilities of generative AI. This collaboration can drive the development of AI accelerators: GPUs, which were originally built for graphics but are well suited to the parallel arithmetic of AI workloads, and purpose-built chips such as TPUs, which are designed specifically for AI computations.

Furthermore, advancements in semiconductor materials and manufacturing techniques can also play a crucial role in meeting the demands of generative AI. For instance, wide-bandgap materials like gallium nitride (GaN) and silicon carbide (SiC) can improve the efficiency of power-conversion components, because they tolerate higher switching frequencies, voltages, and temperatures than conventional silicon devices.

In conclusion, while generative AI holds tremendous potential to revolutionize semiconductor design by automating and optimizing complex processes, it does not simplify the challenges associated with hardware design. Instead, it introduces new dimensions of complexity that require innovative solutions in hardware architecture, power management, and material science. The future of semiconductor design, therefore, lies not only in the advancement of AI technologies but also in the concurrent evolution of the hardware that supports them.

Challenges of Integrating Generative AI into Existing Hardware Systems

Generative AI, a subset of artificial intelligence focused on creating new content ranging from text to images and beyond, has been heralded as a transformative force in technology. However, its integration into existing hardware systems presents a myriad of challenges that are often underestimated. These challenges stem from the unique demands generative AI places on hardware, particularly in terms of computational power and data handling capabilities.

Firstly, generative AI models, such as those based on deep learning architectures like GANs (Generative Adversarial Networks) and transformers, require significant computational resources. These models involve large numbers of parameters and complex matrix operations, which can be taxing even for high-end processors. Traditional CPUs (Central Processing Units), commonly found in most existing systems, are often inadequate for these tasks due to their limited parallel processing capabilities. Instead, GPUs (Graphics Processing Units) or specialized AI accelerators like TPUs (Tensor Processing Units) are preferred because of their ability to handle multiple operations concurrently. This necessitates either a substantial upgrade of existing hardware or the integration of new, specialized components, which can be cost-prohibitive and logistically complex.
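To make "large numbers of parameters and complex matrix operations" concrete, the following Python sketch estimates two quantities: the memory needed just to hold a model's weights, and the floating-point operations in a single dense matrix multiplication. The function names, the 7-billion-parameter model size, and the matrix shapes are hypothetical examples, not figures from this article. The estimate also ignores activations, optimizer state, and caches, so real requirements are higher.

```python
# Bytes used to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gib(num_params: int, dtype: str = "fp16") -> float:
    """Memory needed to hold the weights alone, in GiB (2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


def matmul_flops(m: int, k: int, n: int) -> int:
    """FLOPs for an (m x k) @ (k x n) product: one multiply and one add per term."""
    return 2 * m * k * n


if __name__ == "__main__":
    params = 7_000_000_000  # hypothetical model size
    for dtype in ("fp32", "fp16", "int8"):
        print(f"{dtype}: {weight_memory_gib(params, dtype):.2f} GiB of weights")

    # Hypothetical layer: 2048 tokens through a 4096 x 4096 weight matrix.
    print(f"matmul: {matmul_flops(2048, 4096, 4096) / 1e9:.1f} GFLOP")
```

Even this rough arithmetic shows why precision and memory capacity matter when choosing an accelerator: halving the bytes per parameter halves the weight footprint.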

Moreover, the integration of generative AI into existing systems is not merely a matter of swapping out old processors for new ones. Compatibility issues often arise, as newer AI-optimized hardware may not seamlessly interface with older system architectures. Bridging the gap can require significant software and firmware updates, which take additional time and expertise to implement. Furthermore, these updates can introduce new vulnerabilities and stability issues, complicating the deployment process even further.

Another critical aspect is the data throughput required by generative AI applications. These systems often need to process and generate large volumes of data at high speeds, necessitating robust data storage and retrieval systems. Traditional data storage solutions may not be up to the task, especially when it comes to handling the input/output operations per second (IOPS) that high-performance AI systems demand. This might require the adoption of faster SSDs (Solid State Drives) or keeping working data in memory, for example with in-memory databases, which again adds to the complexity and cost of system upgrades.
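The relationship between IOPS and sustained throughput is simple arithmetic: throughput is roughly IOPS multiplied by the size of each operation. The Python sketch below works through it in both directions. The function names, block size, and target rate are hypothetical, and the calculation ignores queue depth, caching, and protocol overhead.

```python
def throughput_mb_s(iops: float, block_size_bytes: int) -> float:
    """Approximate sustained throughput in MB/s (10**6 bytes per second)."""
    return iops * block_size_bytes / 1e6


def required_iops(target_mb_s: float, block_size_bytes: int) -> float:
    """IOPS needed to sustain a target throughput at a given block size."""
    return target_mb_s * 1e6 / block_size_bytes


if __name__ == "__main__":
    # Hypothetical drive: 100,000 IOPS at 4 KiB per operation.
    print(f"{throughput_mb_s(100_000, 4096):.1f} MB/s")

    # Hypothetical pipeline that must be fed at 2,000 MB/s in 4 KiB reads.
    print(f"{required_iops(2_000, 4096):,.0f} IOPS required")
```

Small block sizes push the IOPS requirement up sharply for the same throughput, which is one reason a drive that looks adequate on paper may still starve an accelerator.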

Additionally, the power consumption of generative AI models can be substantial. As these models become more complex and are tasked with processing ever-larger datasets, the energy required to power them increases. This can strain the power delivery systems of existing hardware setups and may require the installation of additional cooling solutions to dissipate the increased heat generated by more powerful processors. The environmental impact of scaling up these resources also cannot be ignored, as higher power consumption generally translates into higher carbon emissions unless low-carbon energy sources are used.
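A back-of-the-envelope estimate shows how power draw turns into energy use and emissions. The Python sketch below multiplies device power by run time, scales it by power usage effectiveness (PUE, the ratio of total facility energy to IT equipment energy) to account for cooling and other overhead, and then applies a grid carbon intensity. PUE is not mentioned in the original text and is an assumption of this sketch. Every number is a hypothetical placeholder, not a measurement.

```python
def energy_kwh(num_devices: int, watts_per_device: float, hours: float, pue: float = 1.0) -> float:
    """Facility-level energy in kWh, scaling IT load by PUE for cooling and overhead."""
    return num_devices * watts_per_device * hours * pue / 1000


def emissions_kg(kwh: float, grid_kg_co2_per_kwh: float) -> float:
    """Estimated emissions in kg CO2 for a given grid carbon intensity."""
    return kwh * grid_kg_co2_per_kwh


if __name__ == "__main__":
    # Hypothetical job: 8 accelerators at 400 W each for 24 hours, PUE of 1.5.
    kwh = energy_kwh(num_devices=8, watts_per_device=400, hours=24, pue=1.5)
    print(f"energy: {kwh:.1f} kWh")

    # Hypothetical grid intensity of 0.4 kg CO2 per kWh.
    print(f"emissions: {emissions_kg(kwh, 0.4):.2f} kg CO2")
```

Setting the grid intensity close to zero models a low-carbon supply. The energy and cooling load stay the same, so the hardware burden remains even when the emissions do not.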

Finally, the maintenance and scalability of hardware systems running generative AI applications pose their own set of challenges. As AI models evolve and improve, hardware systems need to be adaptable enough to scale with these advancements. This often means that hardware systems must be designed with future upgrades in mind, which can be difficult to predict given the rapid pace of AI research and development.

In conclusion, while generative AI offers exciting possibilities, its integration into existing hardware systems is fraught with challenges. These range from the need for more powerful and compatible hardware to issues with data throughput, power consumption, and system scalability. Addressing these challenges requires a thoughtful approach to system design and resource allocation, underscoring the fact that advancements in AI capabilities do not automatically simplify the underlying hardware requirements.

The Limitations of Generative AI in Overcoming Physical Hardware Constraints

The advent of generative artificial intelligence (AI) has ushered in transformative changes across various sectors, from automating routine tasks to generating complex data-driven outputs. However, the notion that generative AI can alleviate the complexities inherent in hardware design and operation is overly optimistic. This misconception overlooks the fundamental limitations of AI when confronted with the physical and practical constraints of hardware systems.

Generative AI operates by learning from vast datasets to produce new content or solutions that mimic the original data’s style, structure, and functionality. While this capability is revolutionary, its effectiveness is inherently tied to the quality and scope of the data it is trained on. This dependency underscores the first significant limitation: data does not always perfectly represent the physical constraints and real-world variability in hardware environments. For instance, AI models trained to optimize circuit design might not account for unforeseen physical interactions at nano-scale levels, which can only be captured through empirical testing and not through data alone.

Moreover, hardware challenges often involve non-linear and dynamic problems that are difficult to model accurately in a virtual environment. Thermal dynamics, electromagnetic interference, and material fatigue are examples where real-world testing and iterative prototypes are indispensable. Generative AI can propose designs that appear optimal in a simulated environment but fail under real-world operating conditions. This discrepancy arises because current AI technologies lack the capability to fully understand and integrate complex physical laws and real-time feedback into their algorithms.

Integrating AI-generated solutions into existing hardware systems adds another layer of complexity. Hardware systems are often constrained by legacy issues, such as compatibility with existing components and adherence to industry-specific regulations, which an AI system may not fully account for. The risk is that AI might generate solutions that are theoretically sound but practically infeasible, leading to increased costs and delayed project timelines as teams work to reconcile AI proposals with practical hardware constraints.

Furthermore, the reliance on generative AI for hardware applications raises concerns about the predictability and reliability of the outcomes. Since AI systems can generate a vast array of solutions, the onus remains on human engineers to validate and refine these solutions, ensuring they meet stringent safety and performance standards. This necessity underscores the fact that AI is not a panacea but rather a tool that must be wielded with a deep understanding of its limitations and potential biases.

In conclusion, while generative AI holds considerable promise for enhancing efficiency and innovation in hardware development, it is not a straightforward solution to the complex challenges that hardware presents. The physical world imposes constraints that are currently beyond the reach of AI’s capabilities. Effective integration of AI into hardware development requires a balanced approach that combines AI’s computational power with empirical engineering insights. As we continue to advance in our AI capabilities, it remains crucial to maintain a realistic perspective on its role and limitations in the context of hardware innovation. This balanced approach will ensure that we harness the benefits of AI without overlooking the irreplaceable value of human expertise and real-world testing in the domain of hardware development.

Conclusion

Generative AI does not inherently simplify hardware challenges; instead, it shifts and transforms them. While generative AI models can automate and optimize certain tasks, they require substantial computational resources for training and operation, leading to increased demands on hardware infrastructure. This necessitates advancements in processor technology, energy efficiency, and system scalability. Additionally, the deployment of generative AI in real-world applications often requires specialized hardware to meet performance and efficiency criteria, further complicating hardware design and implementation. Thus, rather than simplifying hardware challenges, generative AI introduces new complexities and demands for innovation in hardware development.